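|

    for i in range(iters_num):
        # Draw a random mini-batch of indices
        # (np.random.choice samples with replacement by default)
        batch_mask = np.random.choice(train_size, batch_size)
        x_batch = x_train[batch_mask]
        t_batch = t_train[batch_mask]

        # Compute the gradient of the loss with respect to each parameter
        grad = network.gradient(x_batch, t_batch)

        # Gradient descent step on every parameter
        for key in ('W1', 'b1', 'W2', 'b2'):
            network.params[key] -= learning_rate * grad[key]

        # Record the mini-batch loss after the update
        loss = network.loss(x_batch, t_batch)
        train_loss_list.append(loss)

        # Once per epoch, evaluate accuracy on the full training and test sets
        if i % iter_per_epoch == 0:
            train_acc = network.accuracy(x_train, t_train)
            test_acc = network.accuracy(x_test, t_test)
            train_acc_list.append(train_acc)
            test_acc_list.append(test_acc)
            print("train acc, test acc | " + str(train_acc) + ", " + str(test_acc))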
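The `i % iter_per_epoch == 0` check controls how often accuracy is logged. The sketch below counts how many times it fires. The values of `train_size`, `batch_size`, and `iters_num` are not shown in this excerpt, so the numbers used here (an MNIST-sized training set of 60000, batches of 100, 10000 iterations) are assumptions chosen for illustration, as is the name `log_iterations`.

```python
# Assumed values (not shown in this excerpt): MNIST-sized training set,
# mini-batches of 100, and 10,000 iterations in total.
train_size = 60000
batch_size = 100
iters_num = 10000

# Number of iterations that make up one nominal epoch.
iter_per_epoch = max(train_size // batch_size, 1)

# Iterations at which the `i % iter_per_epoch == 0` check fires.
log_iterations = [i for i in range(iters_num) if i % iter_per_epoch == 0]

print(iter_per_epoch)       # 600
print(len(log_iterations))  # 17
print(log_iterations[-1])   # 9600
```

Under these assumptions the check fires 17 times, at iterations 0, 600, ..., 9600, which would match the 17 lines of output. The first line is logged at `i == 0`, after only a single parameter update.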

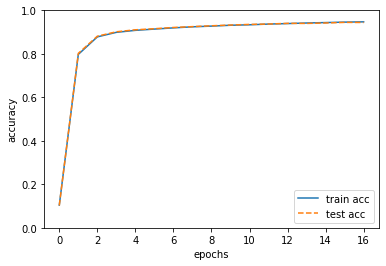

    train acc, test acc | 0.10441666666666667, 0.1028
    train acc, test acc | 0.7974333333333333, 0.8023
    train acc, test acc | 0.87805, 0.8812
    train acc, test acc | 0.8989333333333334, 0.9015
    train acc, test acc | 0.90805, 0.9106
    train acc, test acc | 0.9139166666666667, 0.9156
    train acc, test acc | 0.9194166666666667, 0.9213
    train acc, test acc | 0.9242833333333333, 0.9252
    train acc, test acc | 0.9275666666666667, 0.9291
    train acc, test acc | 0.9314833333333333, 0.9324
    train acc, test acc | 0.9336333333333333, 0.9354
    train acc, test acc | 0.93695, 0.9371
    train acc, test acc | 0.9392333333333334, 0.9403
    train acc, test acc | 0.9419, 0.9399
    train acc, test acc | 0.94385, 0.9419
    train acc, test acc | 0.9459333333333333, 0.9447
    train acc, test acc | 0.9472, 0.9443
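If the printed log is all that is available, the accuracies can be recovered by splitting each line on `|` and `,`. The following is a minimal sketch of that idea. The helper name `parse_acc_line` is hypothetical and not part of the original code, and the two sample lines are the first and last lines of the output above.

```python
def parse_acc_line(line):
    """Split one 'train acc, test acc | a, b' line into two floats."""
    _, values = line.split("|")
    train_acc, test_acc = (float(v) for v in values.split(","))
    return train_acc, test_acc


# First and last lines of the output above, copied verbatim.
log = [
    "train acc, test acc | 0.10441666666666667, 0.1028",
    "train acc, test acc | 0.9472, 0.9443",
]

history = [parse_acc_line(line) for line in log]
last_train, last_test = history[-1]

# Gap between training and test accuracy at the last logged epoch.
gap = last_train - last_test
print(f"final train/test gap: {gap:.4f}")  # final train/test gap: 0.0029
```

The final gap is small, so in this run training and test accuracy rise together with no sign of a large divergence.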

|